微调

每个模型的月存储费用为 2 美元。有关更详细的定价信息,请访问我们的定价页面。

介绍

微调是一种强大的技术,用于为特定用例定制和优化大型语言模型 (LLM) 的性能。通过在与特定任务相关的标注数据集上进一步训练预训练的 LLM,微调可以提高模型的性能。这可以用于处理复杂或差异较大的任务,也可以用于训练较小的模型以匹配较大模型的性能,从而可能带来延迟和成本效益。性能提升因用例而异。

Mistral AI 通过 La Plateforme 提供微调 API,可以轻松微调我们所有的开源和商业模型。对于有兴趣试验不同参数并更深入了解微调过程的用户,我们还提供对我们的开源代码库 mistral-finetune 的访问。然而,我们建议使用我们的 API 进行所有微调,因为它不需要 GPU,提供多种选项,并且还支持对我们的商业模型进行微调。

在本指南中,我们将涵盖以下主题

- 准备数据集

- 使用 Mistral API 的端到端示例

- 使用

mistral-finetune的端到端示例

准备数据集

一旦您确定微调是解决特定用例的最佳方法,最初也是最关键的步骤就是收集和准备用于模型微调的训练数据。以下是您可能会发现有用的六个具体用例

用例

用例 1:特定语气

微调对于在对话中建立特定语气很有用。例如,我们可以创建一个反映《哈利·波特》系列中邓布利多教授语气的数据集。典型的用户/助手交流如下所示

{

"messages": [

{

"role": "user",

"content": "How are you?"

},

{

"role": "assistant",

"content": "Ah, my dear friend, I am as well as one can be, surrounded by magic, the twinkling stars, and the whispers of ancient knowledge. Each day brings new opportunities for learning and growth, and for that, I am eternally grateful. How may I assist you on this fine day?"

}

]

}

为了创建具有这种语气的数据集,我们从 Mistral-Large 模型生成了角色描述,然后将这些描述添加到系统提示中。通过对该数据集进行微调,我们可以达到所需的结果,而无需在每次对话中显式提示角色描述。

from mistralai import Mistral

import os

api_key = os.environ.get("MISTRAL_API_KEY")

def run_mistral(sys_message, user_message, model="mistral-large-latest"):

client = Mistral(api_key=api_key)

messages = [

{

"role": "system",

"content": sys_message

},

{

"role": "user",

"content": user_message

}

]

chat_response = client.chat.complete(

model=model,

messages=messages

)

return chat_response.choices[0].message.content

sys_message = """

You are Albus Dumbledore. You are the headmaster of Hogwarts School of Witchcraft and

Wizardry and are widely regarded as one of the most powerful and knowledgeable wizards

of all time. You are known for your eccentricities, such as your love of sweets and

your quirky sense of humor. You often using riddles, metaphors, and wise sayings to

convey your thoughts and teach important lessons. Despite your gentle demeanor, you

can be firm and assertive when necessary, and your words often carry a great deal of

weight and authority. Respond succinctly in Dumbledore's distinctive tone.

"""

resp = run_mistral(sys_message, "How are you?")

print(resp)

多轮对话

您的示例也可以包含多轮对话,其中包含多个用户和助手消息。

{

"messages": [

{

"role": "user",

"content": "How are you?"

},

{

"role": "assistant",

"content": "Ah, my dear friend, I am as well as one can be, surrounded by magic, the twinkling stars, and the whispers of ancient knowledge. Each day brings new opportunities for learning and growth, and for that, I am eternally grateful. How may I assist you on this fine day?"

},

{

"role": "user",

"content": "I'm concerned about Harry."

},

{

"role": "assistant",

"content": "Ah, my dear child, worry is but a shadow that darkens the heart. Yet, I understand your concern for young Harry. His journey is indeed fraught with challenges. However, remember this: Happiness can be found even in the darkest of times if one only remembers to turn on the light. Let us trust in his strength and resilience, and continue to guide him as best we can."

}

]

}

用例 2:特定格式

微调模型对于生成特定格式的输出非常有利,因为它允许模型从包含所需格式示例的标注数据集中学习所需的输出格式。这种训练有助于模型生成符合该格式的输出。

例如,假设您想从医疗记录中提取医疗信息。让我们使用 medical_knowledge_from_extracts 数据集,其中所需的输出格式是一个 JSON 对象,包含

条件 (conditions),干预措施(interventions),可进一步分类为行为 (behavioral)、药物 (drugs) 和其他 (other) 类型。输出示例如下

{

"conditions": "Proteinuria",

"interventions": "Drug: Losartan Potassium|Other: Comparator: Placebo (Losartan)|Drug: Comparator: amlodipine besylate|Other: Comparator: Placebo (amlodipine besylate)|Other: Placebo (Losartan)|Drug: Enalapril Maleate"

}

在此数据集上微调预训练模型可以帮助它学习生成这种特定格式的输出。

以下 Python 代码展示了如何加载此数据,将其格式化为所需的格式并保存为 .jsonl 文件。您还可以考虑随机化顺序并将数据划分为单独的训练和验证文件,以便根据您的用例进行进一步的数据处理。

import pandas as pd

import json

df = pd.read_csv(

"https://hugging-face.cn/datasets/owkin/medical_knowledge_from_extracts/raw/main/finetuning_train.csv"

)

df_formatted = [

{

"messages": [

{"role": "user", "content": row["Question"]},

{"role": "assistant", "content": row["Answer"]},

]

}

for index, row in df.iterrows()

]

with open("data.jsonl", "w") as f:

for line in df_formatted:

json.dump(line, f)

f.write("\n")

以下是一个数据实例的示例

{

"messages": [

{

"role": "user",

"content": "Your goal is to extract structured information from the user's input that matches the form described below. When extracting information please make sure it matches the type information exactly...Input: DETAILED_MEDICAL_NOTES"

},

{

"role": "assistant",

"content": "{'conditions': 'Proteinuria', 'interventions': 'Drug: Losartan Potassium|Other: Comparator: Placebo (Losartan)|Drug: Comparator: amlodipine besylate|Other: Comparator: Placebo (amlodipine besylate)|Other: Placebo (Losartan)|Drug: Enalapril Maleate'}"

}

]

}

在此示例中,提示仍然包含相当复杂的指令。我们可以在没有复杂提示词的情况下微调数据集上的模型。用户内容可以是医疗记录,而无需任何指令。微调后的模型可以直接从医疗记录中学习生成特定格式的输出。让我们只使用医疗记录作为用户消息

import pandas as pd

import json

df = pd.read_csv(

"https://hugging-face.cn/datasets/owkin/medical_knowledge_from_extracts/raw/main/finetuning_train.csv"

)

df_formatted = [

{

"messages": [

{"role": "user", "content": row["Question"].split("Input:")[1]},

{"role": "assistant", "content": row["Answer"]},

]

}

for index, row in df.iterrows()

]

with open("data.jsonl", "w") as f:

for line in df_formatted:

json.dump(line, f)

f.write("\n")

以下是一个数据实例的示例

{

"messages": [

{

"role": "user",

"content": "DETAILED_MEDICAL_NOTES"

},

{

"role": "assistant",

"content": "{'conditions': 'Proteinuria', 'interventions': 'Drug: Losartan Potassium|Other: Comparator: Placebo (Losartan)|Drug: Comparator: amlodipine besylate|Other: Comparator: Placebo (amlodipine besylate)|Other: Placebo (Losartan)|Drug: Enalapril Maleate'}"

}

]

}

用例 3:特定风格

您可以针对特定风格进行微调。例如,以下是如何使用 mistral-large 生成“新闻文章风格师”的微调数据集,该风格师遵循风格指南来润色和重写新闻文章。

过程很简单。首先,使用一些指南,我们要求模型评估文章数据集,并对可能的改进提供评论。然后,完成后,我们要求模型重写这些文章,同时考虑反馈,如下所示

def process_refined_news(args):

line, system, instruction = args

record = json.loads(line)

news_article = record.get("news")

critique= record.get("critique")

status = record.get("status")

time.sleep(1)

try:

if status == "SUCCESS":

answer = CLIENT.chat.complete(

model="mistral-large-latest",

messages= [

{"role": "system", "content": system},

{"role": "user", "content": news_article},

{"role": "assistant", "content": critique},

{"role": "user", "content": instruction},

],

temperature=0.2,

max_tokens=2048

)

new_news = answer.choices[0].message.content

result = json.dumps({"news": news_article, "critique": critique, "refined_news": new_news, "status": "SUCCESS"})

else:

result = json.dumps({"news": news_article, "critique": critique, "refined_news": critique, "status": "ERROR"})

except Exception as e:

result = json.dumps({"news": news_article, "critique": critique, "refined_news": str(e), "status": "ERROR"})

random_hash = secrets.token_hex(4)

with open(f"./data/refined_news_{random_hash}.jsonl", "w") as f:

f.write(result)

return result

system = "Polish and restructure the news articles to align them with the high standards of clarity, accuracy, and elegance set by the style guide. You are presented with a news article. Identify the ten (or fewer) most significant stylistic concerns and provide examples of how they can be enhanced."

instruction = """

Now, I want you to incorporate the feedback and critiques into the news article and respond with the enhanced version, focusing solely on stylistic improvements without altering the content.

You must provide the entire article enhanced.

Do not make ANY comments, only provide the new article improved.

Do not tell me what you changed, only provide the new article taking into consideration the feedback you provided.

The new article needs to have all the content of the original article but with the feedback into account.

"""

data_path = "./generated_news_critiques.jsonl"

with open(data_path, "r") as f:

lines = f.readlines()

lines = [(line, system, instruction) for line in lines]

results = process_map(process_refined_news, lines, max_workers=20, chunksize=1)

with open("./generated_refined_news.jsonl", "w") as f:

for result in results:

f.write(result + "\n")

完整的笔记本可以在这里找到

用例 4:编码

微调是一种高度有效的方法,可用于将预训练模型定制到特定领域任务,例如从自然语言文本生成 SQL 查询。通过在相关数据集上对模型进行微调,它可以学习该任务独有的新特征和模式。例如,在 text-to-SQL 集成的情况下,我们可以使用包含 SQL 问题以及 SQL 表上下文的 sql-create-context 来训练模型输出正确的 SQL 语法。

为了格式化数据用于微调,我们可以使用 Python 代码将输入和输出数据预处理为适合模型的格式。以下是 text-to-SQL 生成数据格式化的示例

import pandas as pd

import json

df = pd.read_json(

"https://hugging-face.cn/datasets/b-mc2/sql-create-context/resolve/main/sql_create_context_v4.json"

)

df_formatted = [

{

"messages": [

{

"role": "user",

"content": f"""

You are a powerful text-to-SQL model. Your job is to answer questions about a database. You are given a question and context regarding one or more tables.

You must output the SQL query that answers the question.

### Input:

{row["question"]}

### Context:

{row["context"]}

### Response:

""",

},

{"role": "assistant", "content": row["answer"]},

]

}

for index, row in df.iterrows()

]

with open("data.jsonl", "w") as f:

for line in df_formatted:

json.dump(line, f)

f.write("\n")

以下是格式化数据的示例

{

"messages": [

{

"role": "user",

"content": "\n You are a powerful text-to-SQL model. Your job is to answer questions about a database. You are given a question and context regarding one or more tables. \n\n You must output the SQL query that answers the question.\n \n ### Input:\n How many heads of the departments are older than 56 ?\n \n ### Context:\n CREATE TABLE head (age INTEGER)\n \n ### Response:\n "

},

{

"role": "assistant",

"content": "SELECT COUNT(*) FROM head WHERE age > 56"

}

]

}

用例 5:RAG 中的领域特定增强

微调可以改善标准 RAG 工作流中的问答性能。例如,这项研究通过使用微调的嵌入模型和微调的 LLM 展示了更高的 RAG 性能。另一项研究引入了检索增强微调 (RAFT),该方法微调 LLM,使其不仅能根据相关文档回答问题,还能忽略不相关的文档,从而显着提高了所有专业领域的 RAG 性能。

一般来说,为了生成用于 RAG 的微调数据集,我们从 context 开始,这是您感兴趣的文档的原始文本。根据 context,您可以生成 questions 和 answers,以获得查询-上下文-答案三元组。以下是用于生成问题和答案的两个提示模板

-

根据上下文生成问题的提示模板

Context information is below.

---------------------

{context_str}

---------------------

Given the context information and not prior knowledge. Generate {num_questions_per_chunk}

questions based on the context. The questions should be diverse in nature across the

document. Restrict the questions to the context information provided. -

根据上下文和上一个提示模板生成的问题生成答案的提示模板

Context information is below

---------------------

{context_str}

---------------------

Given the context information and not prior knowledge,

answer the query.

Query: {generated_query_str}

Answer:

用例 6:知识迁移

微调的重要用例之一是大型模型的知识蒸馏。知识蒸馏是一个过程,涉及将由更大、更复杂的模型(称为教师模型)学到的知识转移到更小、更简单的模型(称为学生模型)。微调在此过程中起着至关重要的作用,因为它使学生模型能够从教师模型的输出中学习并相应地调整其权重。

假设我们有一些需要标注的医疗记录数据。在现实场景中,我们通常没有标注的真实值。例如,让我们考虑我们在用例 2 中使用的 medical_knowledge_from_extracts 数据集中的医疗记录。假设我们没有标注的验证真实值。在这种情况下,我们可以利用旗舰模型 Mistral-Large 来创建标注,因为它能生成更可靠和准确的结果。随后,我们可以使用 Mistral-Large 生成的输出对较小的模型进行微调。

下面的 Python 函数加载我们的数据集并从 Mistral-Large 生成标注(在助手消息中)

from mistralai import Mistral

import pandas as pd

import json

import os

api_key = os.environ.get("MISTRAL_API_KEY")

def run_mistral(user_message, model="mistral-large-latest"):

client = Mistral(api_key=api_key)

messages = [

{

"role": "user",

"content": user_message

}

]

chat_response = client.chat.complete(

model=model, response_format={"type": "json_object"}, messages=messages

)

return chat_response.choices[0].message.content

# load dataset and select top 10 rows as an example

df = pd.read_csv(

"https://hugging-face.cn/datasets/owkin/medical_knowledge_from_extracts/resolve/main/finetuning_train.csv"

).head(10)

# use Mistral Large to provide output

df_formatted = [

{

"messages": [

{"role": "user", "content": row["Question"].split("Input:")[1]},

{"role": "assistant", "content": run_mistral(row["Question"])},

]

}

for index, row in df.iterrows()

]

with open("data.jsonl", "w") as f:

for line in df_formatted:

json.dump(line, f)

f.write("\n")

以下是一个数据实例的示例

{

"messages": [

{

"role": "user",

"content": "Randomized trial of the effect of an integrative medicine approach to the management of asthma in adults on disease-related quality of life and pulmonary function. The purpose of this study was to test the effectiveness of an integrative medicine approach to the management of asthma compared to standard clinical care on quality of life (QOL) and clinical outcomes. This was a prospective parallel group repeated measurement randomized design. Participants were adults aged 18 to 80 years with asthma. The intervention consisted of six group sessions on the use of nutritional manipulation, yoga techniques, and journaling. Participants also received nutritional supplements: fish oil, vitamin C, and a standardized hops extract. The control group received usual care. Primary outcome measures were the Asthma Quality of Life Questionnaire (AQLQ), The Medical Outcomes Study Short Form-12 (SF-12), and standard pulmonary function tests (PFTs). In total, 154 patients were randomized and included in the intention-to-treat analysis (77 control, 77 treatment). Treatment participants showed greater improvement than controls at 6 months for the AQLQ total score (P<.001) and for three subscales, Activity (P< 0.001), Symptoms (P= .02), and Emotion (P<.001). Treatment participants also showed greater improvement than controls on three of the SF-12 subscales, Physical functioning (P=.003); Role limitations, Physical (P< .001); and Social functioning (P= 0.03), as well as in the aggregate scores for Physical and Mental health (P= .003 and .02, respectively). There was no change in PFTs in either group. A low-cost group-oriented integrative medicine intervention can lead to significant improvement in QOL in adults with asthma. Output:"

},

{

"role": "assistant",

"content": "{\"conditions\": \"asthma\", \"drug_or_intervention\": \"integrative medicine approach with nutritional manipulation, yoga techniques, journaling, fish oil, vitamin C, and a standardized hops extract\"}"

}

]

}

用例 7:用于函数调用的代理

在决定采取哪些行动和使用哪些工具时,微调在塑造代理的推理和决策过程中起着关键作用。事实上,Mistral 的函数调用能力是通过对函数调用数据进行微调实现的。然而,在某些情况下,原生的函数调用能力可能不足,尤其是在处理特定工具和领域时。在这种情况下,必须考虑使用您自己的代理数据进行函数调用进行微调。通过使用您自己的数据进行微调,您可以显着提高代理的性能和准确性,使其能够选择正确的工具和行动。

这是一个简单的示例,旨在训练模型在需要时调用 generate_anagram() 函数。对于更复杂的用例,您可以将 tools 列表扩展到 100 个或更多函数,并创建各种示例,演示在不同时间调用不同的函数。这种方法使模型能够学习更广泛的功能,并理解每个函数的适当使用上下文。

{

"messages": [

{

"role": "system",

"content": "You are a helpful assistant with access to the following functions to help the user. You can use the functions if needed."

},

{

"role": "user",

"content": "Can you help me generate an anagram of the word 'listen'?"

},

{

"role": "assistant",

"tool_calls": [

{

"id": "TX92Jm8Zi",

"type": "function",

"function": {

"name": "generate_anagram",

"arguments": "{\"word\": \"listen\"}"

}

}

]

},

{

"role": "tool",

"content": "{\"anagram\": \"silent\"}",

"tool_call_id": "TX92Jm8Zi"

},

{

"role": "assistant",

"content": "The anagram of the word 'listen' is 'silent'."

},

{

"role": "user",

"content": "That's amazing! Can you generate an anagram for the word 'race'?"

},

{

"role": "assistant",

"tool_calls": [

{

"id": "3XhQnxLsT",

"type": "function",

"function": {

"name": "generate_anagram",

"arguments": "{\"word\": \"race\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "generate_anagram",

"description": "Generate an anagram of a given word",

"parameters": {

"type": "object",

"properties": {

"word": {

"type": "string",

"description": "The word to generate an anagram of"

}

},

"required": ["word"]

}

}

}

]

}

使用 Mistral API 的端到端示例

您可以通过 Mistral API 微调所有 Mistral 模型。使用 Mistral 的微调 API 按照以下步骤操作。

准备数据集

在此示例中,让我们使用 ultrachat_200k 数据集。我们将一部分数据加载到 Pandas Dataframes 中,将数据分割为训练集和验证集,并将数据保存为微调所需的 jsonl 格式。

import pandas as pd

df = pd.read_parquet('https://hugging-face.cn/datasets/HuggingFaceH4/ultrachat_200k/resolve/main/data/test_gen-00000-of-00001-3d4cd8309148a71f.parquet')

df_train=df.sample(frac=0.995,random_state=200)

df_eval=df.drop(df_train.index)

df_train.to_json("ultrachat_chunk_train.jsonl", orient="records", lines=True)

df_eval.to_json("ultrachat_chunk_eval.jsonl", orient="records", lines=True)

重新格式化数据集

如果您将此 ultrachat_chunk_train.jsonl 上传到 Mistral API,您可能会遇到“Invalid file format”错误消息,这是由于数据格式问题造成的。要将数据重新格式化为正确的格式,您可以下载 reformat_data.py 脚本并使用它来验证和重新格式化训练数据和评估数据

# download the validation and reformat script

wget https://raw.githubusercontent.com/mistralai/mistral-finetune/main/utils/reformat_data.py

# validate and reformat the training data

python reformat_data.py ultrachat_chunk_train.jsonl

# validate the reformat the eval data

python reformat_data.py ultrachat_chunk_eval.jsonl

此 reformat_data.py 脚本是为 UltraChat 数据量身定制的,可能不适用于其他数据集。请相应地修改此脚本并重新格式化您的数据。

运行脚本后,从训练数据中删除了少数几个用例。

Skip 3674th sample

Skip 9176th sample

Skip 10559th sample

Skip 13293th sample

Skip 13973th sample

Skip 15219th sample

让我们检查其中一个用例。此用例存在两个问题

- 其中一个助手消息是空字符串;

- 最后一条消息不是助手消息。

上传数据集

然后我们可以将训练数据和评估数据都上传到 Mistral Client,使其可用于微调作业。

- python

- typescript

- curl

from mistralai import Mistral

import os

api_key = os.environ["MISTRAL_API_KEY"]

client = Mistral(api_key=api_key)

ultrachat_chunk_train = client.files.upload(file={

"file_name": "ultrachat_chunk_train.jsonl",

"content": open("ultrachat_chunk_train.jsonl", "rb"),

})

ultrachat_chunk_eval = client.files.upload(file={

"file_name": "ultrachat_chunk_eval.jsonl",

"content": open("ultrachat_chunk_eval.jsonl", "rb"),

})

import MistralClient from '@mistralai/mistralai';

const apiKey = process.env.MISTRAL_API_KEY;

const client = new MistralClient(apiKey);

const file = fs.readFileSync('ultrachat_chunk_train.jsonl');

const ultrachat_chunk_train = await client.files.create({ file });

const file = fs.readFileSync('ultrachat_chunk_eval.jsonl');

const ultrachat_chunk_eval = await client.files.create({ file });

curl https://api.mistral.ai/v1/files \

-H "Authorization: Bearer $MISTRAL_API_KEY" \

-F purpose="fine-tune" \

-F file="@ultrachat_chunk_train.jsonl"

curl https://api.mistral.ai/v1/files \

-H "Authorization: Bearer $MISTRAL_API_KEY" \

-F purpose="fine-tune" \

-F file="@ultrachat_chunk_eval.jsonl"

示例输出

请注意,下一步需要文件 ID。

{

"id": "66f96d02-8b51-4c76-a5ac-a78e28b2584f",

"object": "file",

"bytes": 140893645,

"created_at": 1717164199,

"filename": "ultrachat_chunk_train.jsonl",

"purpose": "fine-tune"

}

{

"id": "84482011-dfe9-4245-9103-d28b6aef30d4",

"object": "file",

"bytes": 7247934,

"created_at": 1717164200,

"filename": "ultrachat_chunk_eval.jsonl",

"purpose": "fine-tune"

}

创建微调作业

接下来,我们可以创建一个微调作业

- python

- typescript

- curl

# create a fine-tuning job

created_jobs = client.fine_tuning.jobs.create(

model="open-mistral-7b",

training_files=[{"file_id": ultrachat_chunk_train.id, "weight": 1}],

validation_files=[ultrachat_chunk_eval.id],

hyperparameters={

"training_steps": 10,

"learning_rate":0.0001

},

auto_start=False

)

# start a fine-tuning job

client.fine_tuning.jobs.start(job_id = created_jobs.id)

created_jobs

const createdJob = await client.jobs.create({

model: 'open-mistral-7b',

trainingFiles: [ultrachat_chunk_train.id],

validationFiles: [ultrachat_chunk_eval.id],

hyperparameters: {

trainingSteps: 10,

learningRate: 0.0001,

},

});

curl https://api.mistral.ai/v1/fine_tuning/jobs \

--header "Authorization: Bearer $MISTRAL_API_KEY" \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--data '{

"model": "open-mistral-7b",

"training_files": [

"<uuid>"

],

"validation_files": [

"<uuid>"

],

"hyperparameters": {

"training_steps": 10,

"learning_rate": 0.0001

}

}'

示例输出

{

"id": "25d7efe6-6303-474f-9739-21fb0fccd469",

"hyperparameters": {

"training_steps": 10,

"learning_rate": 0.0001

},

"fine_tuned_model": null,

"model": "open-mistral-7b",

"status": "QUEUED",

"job_type": "FT",

"created_at": 1717170356,

"modified_at": 1717170357,

"training_files": [

"66f96d02-8b51-4c76-a5ac-a78e28b2584f"

],

"validation_files": [

"84482011-dfe9-4245-9103-d28b6aef30d4"

],

"object": "job",

"integrations": []

}

分析和评估微调模型

当我们检索模型时,每完成 10% 的进度,并且间隔至少 10 步,我们都会获得以下指标

- 训练损失:模型在训练数据上的误差,表明模型从训练集中学习的情况。

- 验证损失:模型在验证数据上的误差,提供了模型泛化到未见数据情况的洞察。

- 验证 token 准确率:模型在验证集中正确预测的 token 百分比。

验证损失和验证 token 准确率都是模型整体性能的重要指标,有助于评估其泛化能力和在新数据上进行准确预测的能力。

- python

- typescript

- curl

# Retrieve a jobs

retrieved_jobs = client.fine_tuning.jobs.get(job_id = created_jobs.id)

print(retrieved_jobs)

// Retrieve a job

const retrievedJob = await client.jobs.retrieve({ jobId: createdJob.id });

# Retrieve a job

curl https://api.mistral.ai/v1/fine_tuning/jobs/<jobid> \

--header "Authorization: Bearer $MISTRAL_API_KEY" \

--header 'Content-Type: application/json'

运行 100 步时的示例输出

{

"id": "2813b7e6-c511-43ac-a16a-1a54a5b884b2",

"hyperparameters": {

"training_steps": 100,

"learning_rate": 0.0001

},

"fine_tuned_model": "ft:open-mistral-7b:57d37e6c:20240531:2813b7e6",

"model": "open-mistral-7b",

"status": "SUCCESS",

"job_type": "FT",

"created_at": 1717172592,

"modified_at": 1717173491,

"training_files": [

"66f96d02-8b51-4c76-a5ac-a78e28b2584f"

],

"validation_files": [

"84482011-dfe9-4245-9103-d28b6aef30d4"

],

"object": "job",

"integrations": [],

"events": [

{

"name": "status-updated",

"data": {

"status": "SUCCESS"

},

"created_at": 1717173491

},

{

"name": "status-updated",

"data": {

"status": "RUNNING"

},

"created_at": 1717172594

},

{

"name": "status-updated",

"data": {

"status": "QUEUED"

},

"created_at": 1717172592

}

],

"checkpoints": [

{

"metrics": {

"train_loss": 0.816135,

"valid_loss": 0.819697,

"valid_mean_token_accuracy": 1.765035

},

"step_number": 100,

"created_at": 1717173470

},

{

"metrics": {

"train_loss": 0.84643,

"valid_loss": 0.819768,

"valid_mean_token_accuracy": 1.765122

},

"step_number": 90,

"created_at": 1717173388

},

{

"metrics": {

"train_loss": 0.816602,

"valid_loss": 0.820234,

"valid_mean_token_accuracy": 1.765692

},

"step_number": 80,

"created_at": 1717173303

},

{

"metrics": {

"train_loss": 0.775537,

"valid_loss": 0.821105,

"valid_mean_token_accuracy": 1.766759

},

"step_number": 70,

"created_at": 1717173217

},

{

"metrics": {

"train_loss": 0.840297,

"valid_loss": 0.822249,

"valid_mean_token_accuracy": 1.76816

},

"step_number": 60,

"created_at": 1717173131

},

{

"metrics": {

"train_loss": 0.823884,

"valid_loss": 0.824598,

"valid_mean_token_accuracy": 1.771041

},

"step_number": 50,

"created_at": 1717173045

},

{

"metrics": {

"train_loss": 0.786473,

"valid_loss": 0.827982,

"valid_mean_token_accuracy": 1.775201

},

"step_number": 40,

"created_at": 1717172960

},

{

"metrics": {

"train_loss": 0.8704,

"valid_loss": 0.835169,

"valid_mean_token_accuracy": 1.784066

},

"step_number": 30,

"created_at": 1717172874

},

{

"metrics": {

"train_loss": 0.880803,

"valid_loss": 0.852521,

"valid_mean_token_accuracy": 1.805653

},

"step_number": 20,

"created_at": 1717172788

},

{

"metrics": {

"train_loss": 0.803578,

"valid_loss": 0.914257,

"valid_mean_token_accuracy": 1.884598

},

"step_number": 10,

"created_at": 1717172702

}

]

}

使用微调模型

微调作业完成后,您可以通过 retrieved_jobs.fine_tuned_model 查看微调模型的名称。然后您可以使用我们的 chat 端点与微调模型聊天

- python

- typescript

- curl

chat_response = client.chat.complete(

model = retrieved_jobs.fine_tuned_model,

messages = [{"role":'user', "content":'What is the best French cheese?'}]

)

const chatResponse = await client.chat({

model: retrievedJob.fine_tuned_model,

messages: [{role: 'user', content: 'What is the best French cheese?'}],

});

curl "https://api.mistral.ai/v1/chat/completions" \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header "Authorization: Bearer $MISTRAL_API_KEY" \

--data '{

"model": "ft:open-mistral-7b:daf5e488:20240430:c1bed559",

"messages": [{"role": "user", "content": "Who is the most renowned French painter?"}]

}'

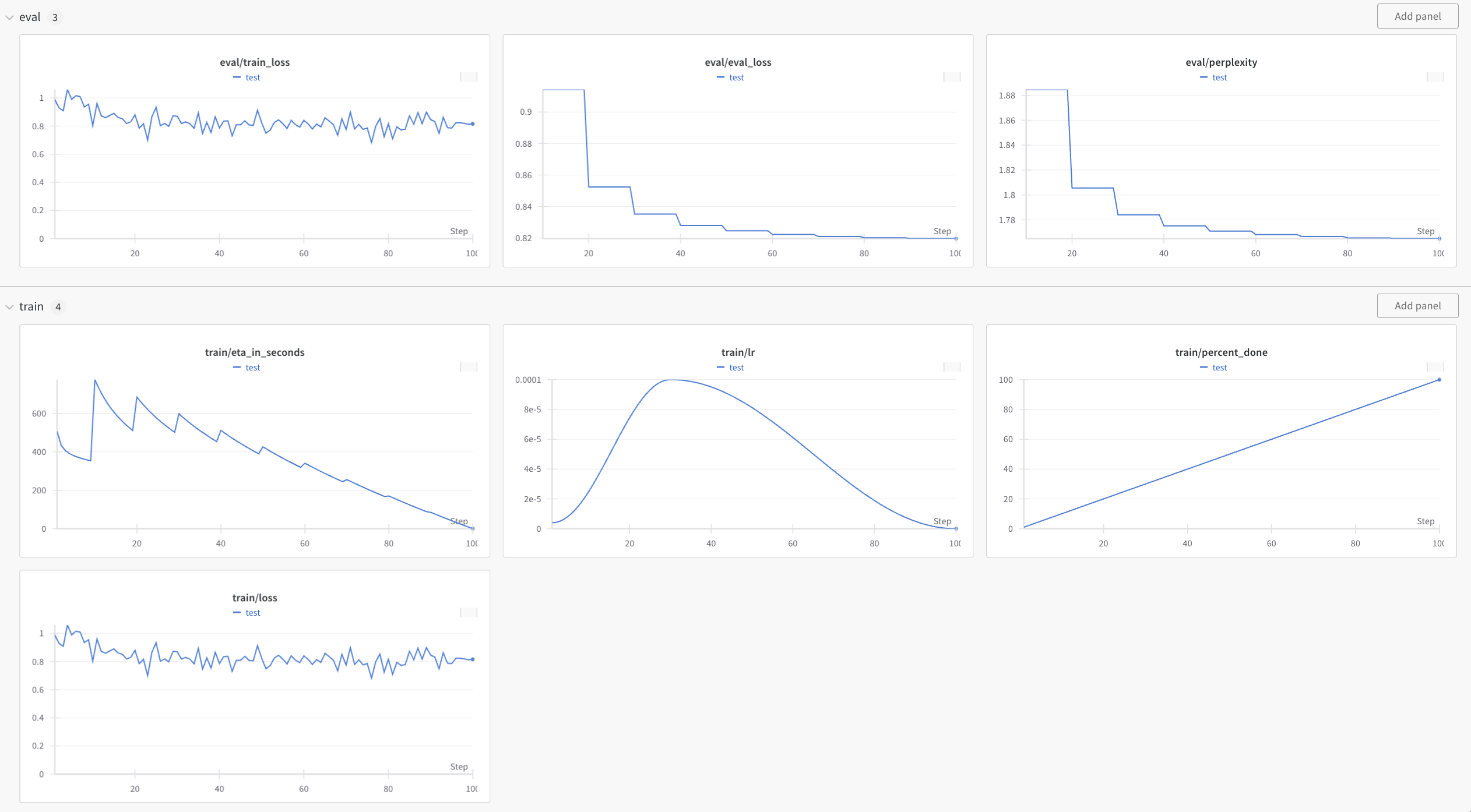

与 Weights and Biases 集成

我们还支持与 Weights & Biases (W&B) 集成,以监控和跟踪与我们的微调作业相关的各种指标和统计信息。要启用与 W&B 的集成,您需要创建一个 W&B 帐户,并在作业创建请求的“integrations”部分添加您的 W&B 信息

client.fine_tuning.jobs.create(

model="open-mistral-7b",

training_files=[{"file_id": ultrachat_chunk_train.id, "weight": 1}],

validation_files=[ultrachat_chunk_eval.id],

hyperparameters={"training_steps": 10, "learning_rate": 0.0001},

integrations=[

{

"project": "<value>",

"api_key": "<value>",

}

]

)

以下是显示我们的微调作业信息的 W&B 控制面板截图。

使用开源 mistral-finetune 的端到端示例

我们还开源了微调代码库 mistral-finetune,允许您微调 Mistral 的开源权重模型(Mistral 7B、Mixtral 8x7B、Mixtral 8x22B)。

要查看如何安装 mistral-finetune、准备和验证数据集、定义训练配置、使用 Mistral-LoRA 进行微调以及运行推理的端到端示例,请参阅 Mistral-finetune 仓库中提供的 README 文件:https://github.com/mistralai/mistral-finetune/tree/main 或按照此示例操作